As you know, performance is a critical aspect of any application. If your QML app is slow or unresponsive, users will quickly lose interest and move on to other alternatives. Therefore, it’s essential to understand how to optimize your app’s performance and make it as fast and responsive as possible.

In the previous post about improving performance and optimizing QML apps, we talked about why optimizing your project is important from a business perspective, and we looked at the efficiency and speed you gain by moving logic from JavaScript to C++. We also talked about efficient delegate management using the model interfaces provided by Qt. As that last topic already touched the UI, let’s discuss more UI-related topics that can help you improve the performance of a QML app. So, let’s dive in!

Creating parts of the UI dynamically is a crucial part of making your user-facing application efficient. The main idea behind this approach is to keep the number of objects living in memory to a minimum. To achieve that, we can instantiate parts of the UI on demand, based on what the user needs at the moment. Let me describe the techniques you can use to do that easily:

The first technique is a general one: it is less about how to load the UI and more about when to do it. Whenever you add a new page, a complex prompt or any other beefy element of the UI, you should always ask yourself “Do I need it in memory while it is not visible?”. Although unused objects can be invisible to the user or not rendered, they can still trigger unnecessary logic, like constantly updating their property bindings. Keeping unused portions of the UI around increases the memory footprint of the application too.

The rule of thumb here is to instantiate only the UI objects that are currently visible to the user; anything that is hidden and not used in any other way at the moment is a candidate for unloading. That can include various elements like subpages, popups or panels. Of course, this should be taken with a grain of salt: I am not talking about non-visual items (like Timers or Connections) or about critical parts of the user interface. If there can be no delay between the user requesting a UI element and it being displayed, keeping such an object instantiated in memory throughout the runtime is still a valid approach. However, for all non-essential items, we should consider loading them on demand.
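As a minimal sketch of this rule, a Loader can tie the lifetime of a panel to whether the user has asked for it. The names `settingsButton` and `settingsPanel` are hypothetical, and the versionless imports assume Qt 6:

```qml
import QtQuick
import QtQuick.Controls

ApplicationWindow {
    width: 400
    height: 300
    visible: true

    Button {
        id: settingsButton   // hypothetical toggle for the panel
        text: "Settings"
        checkable: true
    }

    // The panel is created when the button is checked and
    // released again as soon as `active` becomes false.
    Loader {
        anchors {
            top: settingsButton.bottom
            bottom: parent.bottom
            left: parent.left
            right: parent.right
        }
        active: settingsButton.checked
        sourceComponent: settingsPanel
    }

    Component {
        id: settingsPanel   // hypothetical non-essential panel

        Rectangle {
            color: "lightgray"

            Label {
                anchors.centerIn: parent
                text: "Settings panel"
            }
        }
    }
}
```

While the button is unchecked, the panel and its bindings do not exist at all, which is the memory and binding saving described above.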

A common case where you can notice a huge performance difference in favour of loading objects on demand is changing the locale of a multi-language application that uses the `qsTr()` function to translate different parts of the UI. Such a scenario requires loading a new .qm file using QTranslator, installing it and calling the QML engine's `retranslate()` method. As this refreshes all binding expressions that use strings marked for translation, the more objects with translatable strings you have instantiated, the longer it will take.

Notice that such reevaluation will not only affect displayed strings themselves – all of the bindings using the translatable strings will be updated. On top of that, all objects whose size is calculated based on the content size will also need to reevaluate their dimensions if they contain any string that was re-translated. Unloading unused parts of the UI will lead to lowering the number of bindings which need to be reevaluated causing the translations to be updated much faster.

Assuming we now know what parts of the UI we should avoid instantiating right away and why we should do that, let’s focus on how we can create such objects dynamically.

The two most common ways to implement dynamic creation of parts of the UI are a StackView and Loaders. When creating objects on demand, we need to take into consideration that creating a complex object might take some time. Pushing complex items to a StackView can block the main thread at the time of creation. This is why Loaders are still relevant: if the `asynchronous` property is set to true, they incubate objects without blocking the UI thread.

QML file

```qml
// Fragment of a larger file: `parent` and `divider` refer to items defined around it.
StackView {
    id: stack

    anchors {
        top: parent.top
        bottom: parent.bottom
        left: parent.left
        right: divider.left
    }

    Button {
        id: stackButton

        anchors {
            bottom: parent.bottom
            horizontalCenter: parent.horizontalCenter
        }

        text: "Push to stack"

        onClicked: {
            // push() creates the item synchronously
            console.time("Stack")
            stack.push(nastyComponent)
            console.timeEnd("Stack")
        }
    }
}

Loader {
    id: loader

    anchors {
        top: parent.top
        bottom: parent.bottom
        left: divider.right
        right: parent.right
    }

    asynchronous: true
    active: false
    sourceComponent: nastyComponent

    Button {
        id: loaderButton

        anchors {
            bottom: parent.bottom
            horizontalCenter: parent.horizontalCenter
        }

        text: "Load component"

        onClicked: {
            // With an asynchronous Loader this only times the load request
            console.time("Loader")
            loader.active = true
            console.timeEnd("Loader")
        }
    }
}

Component {
    id: nastyComponent

    Flickable {
        contentHeight: grid.height

        Grid {
            id: grid

            Repeater {
                model: 100

                delegate: Image {
                    height: 50
                    fillMode: Image.PreserveAspectFit
                    source: "https://upload.wikimedia.org/wikipedia/commons/0/0b/Qt_logo_2016.svg"

                    sourceSize {
                        width: 578
                        height: 424
                    }

                    Component.onCompleted: {
                        // Busy-wait for 20 ms to simulate an expensive delegate
                        const millis = 20
                        const start = Date.now()
                        let now = start
                        do {
                            now = Date.now()
                        } while (now - start < millis)
                    }
                }
            }
        }
    }
}
```

How long will loading take after triggering the code shown in the QML file above? The values displayed in the logs can be surprising for some people:

Instantiate Request Time Benchmark

| Approach used | Time to finish instantiate request [ms] |
|---|---|
| StackView | 2002 |
| Loader | 0* |

*Why does pushing to the stack take that long while triggering the Loader appears to finish instantly? Each of the 100 delegates busy-waits for 20 ms when it is completed, so a synchronous push blocks for about 2 seconds. The asynchronous Loader, in contrast, only schedules the work and then creates the objects of the source component across multiple frames instead of in one blocking call. The rest of the GUI is not blocked, so other logic can be handled; in this case, the timer is stopped right after it was started. Don’t let it deceive you: the item is not instantiated instantly, but triggering the Loader does not block the rest of the logic, so we proceed with the other instructions. What is the timing for fully instantiating the component? After modifying the code we can see the following results:

Instantiate Full Component Time Benchmark

| Approach used | Time to instantiate full component [ms] |
|---|---|
| StackView | 2002 |
| Loader | 2004 |

In reality, creating the nasty component via a Loader still takes almost the same amount of time as it takes for the StackView. That might not look impressive at first glance, but it is extremely useful. While the asynchronous Loader is instantiating the object, we can still handle any other logic or simply show a progress indicator. On top of that, users can still interact with the rest of the user interface, so we can let them use other functionalities of the app while the complex element is being loaded.
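The modified benchmark code is not shown in this post. One possible way to measure the full load, sketched here as an assumption rather than the exact code behind the numbers above, is to stop the timer in the Loader's `onLoaded` handler and to drive a BusyIndicator from the Loader's `status`. `heavyComponent` is a hypothetical stand-in for the nasty component, and the imports assume Qt 6:

```qml
import QtQuick
import QtQuick.Controls

Item {
    width: 400
    height: 300

    Loader {
        id: loader
        anchors.fill: parent
        asynchronous: true
        active: false
        sourceComponent: heavyComponent

        // Emitted once the item is fully instantiated (status === Loader.Ready)
        onLoaded: console.timeEnd("Loader full")
    }

    // Visible feedback while the component is being incubated
    BusyIndicator {
        anchors.centerIn: parent
        running: loader.status === Loader.Loading
    }

    Button {
        text: "Load component"
        onClicked: {
            console.time("Loader full")
            loader.active = true
        }
    }

    Component {
        id: heavyComponent   // hypothetical stand-in for a complex component

        Column {
            Repeater {
                model: 100
                delegate: Text {
                    required property int index
                    text: "Item " + index
                }
            }
        }
    }
}
```

Because the button handler returns immediately, the indicator keeps animating and the rest of the UI stays usable until `onLoaded` fires.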

We are now armed with knowledge of a few general techniques for keeping the UI side performant. How about learning some specific elements of the Qt framework that we can use to improve the performance of our application?

One function that might not be widely known is `Qt.callLater()`. Its job is to eliminate redundant calls to a function or signal. The function passed to it is called once the QML engine returns to the event loop, and it is called only once per event loop iteration, no matter how many times it was requested in the meantime. This is particularly useful when you want to avoid executing the same function multiple times in quick succession, which could lead to unnecessary overhead or unintended behavior. Let’s take a look at how to use `Qt.callLater()` in practice:

```qml
Item {
    id: example

    property int callLaterCounter: 0
    property int counter: 0
    readonly property int executionTargetCount: 5

    function callLaterExample() {
        for (let x = 0; x < example.executionTargetCount; x++) {
            example.foo()
        }

        for (let y = 0; y < example.executionTargetCount; y++) {
            Qt.callLater(example.bar)
        }

        console.log("Counter: " + example.counter)
        Qt.callLater(() => { console.log("CallLaterCounter: " + example.callLaterCounter) })
    }

    function foo() {
        console.log("Doing a thing!")
        example.counter++
    }

    function bar() {
        console.log("Need to be executed once!")
        example.callLaterCounter++
    }

    Component.onCompleted: {
        example.callLaterExample()
    }
}
```

In this example, we have two functions `foo()` and `bar()`. Both of the functions are triggered multiple times in a for loop but `bar()` is supposed to be launched only once in this rapid succession. We can imagine that the `bar()` could be responsible for some resource heavy operations which would not give any benefit if triggered multiple times in such a short time.

After executing the example we can see the following messages in the logs:

```
qml: Doing a thing!
qml: Doing a thing!
qml: Doing a thing!
qml: Doing a thing!
qml: Doing a thing!
qml: Counter: 5
qml: Need to be executed once!
qml: CallLaterCounter: 1
```

We can clearly see that although `Qt.callLater()` was triggered multiple times, the `bar()` function was executed only once, so the reduction of calls fulfilled its main purpose. You may also have noticed that we passed an arrow function containing a `console.log` as a parameter; a regular JS function object would work as well. We did that to print the current value of `callLaterCounter`. If we tried to print it immediately, it would not be updated yet, as `bar()` would not have been executed.

You can also pass additional arguments to Qt.callLater(). These arguments will be passed on to the function invoked. However, you need to keep in mind that if redundant calls are eliminated, only the last set of arguments will be passed to the function.

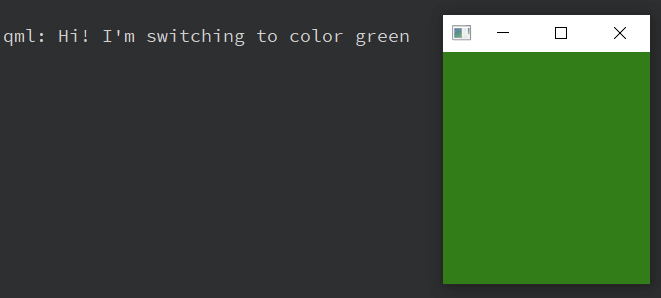

```qml
Rectangle {
    id: backgroundRect

    width: 200
    height: 200
    color: "red"

    MouseArea {
        anchors.fill: parent

        onClicked: {
            // Assume multiple clicks happen in quick succession
            Qt.callLater(backgroundRect.changeBackgroundColor, "yellow");
            Qt.callLater(backgroundRect.changeBackgroundColor, "blue");
            Qt.callLater(backgroundRect.changeBackgroundColor, "green");
        }
    }

    function changeBackgroundColor(newColor) {
        console.log("Hi! I'm switching to color " + newColor)
        backgroundRect.color = newColor;
    }
}
```

In this example, we called the `changeBackgroundColor` function through `Qt.callLater` three times, each time with a different colour. Because the redundant calls were eliminated and only the last set of arguments was kept, the rectangle turned from red straight to green, without going through yellow and blue.

Like with almost all features there are multiple ways to implement dynamically created and scrollable lists in your application. However, sometimes differences between approaches are not limited to just simplicity or ready-made features, but also can significantly impact performance. One such case is using Flickable with nested Repeater instead of ListView. While both methods can be used to achieve similar results on the surface, there are key differences in how they affect the performance of the application.

ListView is a component that provides a convenient way to display a large number of items in a scrollable list. It lets you define a delegate that visualizes the entries of the provided model, and the delegate can interact with the ListView via attached properties. The data model itself can be based on one of many supported types, like ListModel, XmlListModel or custom C++ models that inherit from QAbstractItemModel.

ListView automatically handles the positioning, scrolling and recycling of the instantiated delegates as the user scrolls through the list and items are leaving the viewport. This means that even with large datasets, only a small number of items are kept instantiated at a time.

Another way to display a series of items in a scrollable list, which I’ve seen in the wild, is combining multiple QML types to provide all of the functionalities to create a quasi-ListView. In such a scenario, Flickable is used for its scrolling functionalities which allow users to move through the content that wouldn’t fit on the screen. Although the ListView derives from Flickable, the base type does not provide any functionalities to instantiate objects dynamically nor manage their lifetime.

Objects representing entries in the model are instantiated using a Repeater. It populates the Flickable based on the provided QML or C++ model. In this equation, we also need to add a layout handler, as neither Repeater nor Flickable handles the positioning of its children on its own. Because of that, the Repeater is usually wrapped in some kind of layout, like ColumnLayout for vertical lists or RowLayout for horizontal ones.
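Such a quasi-ListView could look roughly like the sketch below. It uses a plain `Column` positioner instead of ColumnLayout to stay within the QtQuick import, and the model size and ids are arbitrary, illustrative choices (imports assume Qt 6):

```qml
import QtQuick

Flickable {
    width: 300
    height: 400
    clip: true
    contentHeight: column.height   // make the whole column scrollable

    Column {
        id: column

        Repeater {
            id: repeater
            model: 1000   // arbitrary, illustrative model size

            delegate: Text {
                required property int index
                height: 40
                text: "Entry " + index
            }
        }
    }

    // Logs how many delegates exist right after construction,
    // regardless of how many of them fit into the viewport.
    Component.onCompleted: console.log("Delegates created:", repeater.count)
}
```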

After putting all of those elements together we have something that looks like a list, is scrollable and represents a model that contains all the data. Everything seems to look fine, changes are committed to the repository and happy developers finish the work for the day. On the next day after turning on the device they were working on, to their surprise, the performance of the application significantly drops when a large data model is provided to the newly created list. Why is that?

While there are scenarios in which using Flickable with Repeater might be useful, I consider this an anti-pattern for creating dynamic list views. The reason is that neither Flickable nor Repeater provides any mechanism for managing the lifetime of the objects they contain based on their visibility.

Delegates instantiated by a Repeater are not destroyed when they move outside the Flickable viewport while scrolling, so there is nothing to load on demand when they move into the viewport again. All of the dynamically created objects are instantiated at once when the Repeater and Flickable are constructed, and all of them are kept in memory for the entire lifetime of their parents. Such behaviour is not that noticeable when the dataset is small and only a few delegates are created. However, the more entries the model has, the bigger the performance hit, as more objects, bindings, animations and other elements need to be handled by the engine.

The behavior of the same list implemented using ListView is fundamentally different. As this type manages the delegate lifecycle based on the delegates' position relative to the viewport, the number of instantiated delegates stays roughly constant regardless of the number of entries in the model. ListView instantiates only the delegates that are currently visible in the viewport, plus a few delegates outside of the viewport as a buffer. As the user scrolls, it creates new delegates so that the user sees the corresponding data, while items that are no longer visible and have reached the end of the buffer are destroyed.

Such an approach allows you to keep the number of objects in memory in check. You can also control the size of that buffer, expressed in pixels, with the `cacheBuffer` property.
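For comparison, here is a minimal ListView sketch over the same kind of model. The model size, delegate height and `cacheBuffer` value are illustrative assumptions, not recommendations (imports assume Qt 6):

```qml
import QtQuick

ListView {
    width: 300
    height: 400
    clip: true
    model: 1000        // arbitrary, illustrative model size
    cacheBuffer: 200   // keep delegates alive up to 200 px outside the viewport

    delegate: Text {
        required property int index
        height: 40
        text: "Entry " + index

        // Log the delegate lifecycle to observe creation and destruction while scrolling
        Component.onCompleted: console.log("Created delegate", index)
        Component.onDestruction: console.log("Destroyed delegate", index)
    }
}
```

Scrolling through the list should show delegates being created as they approach the viewport and destroyed once they leave the buffer, instead of all entries being instantiated up front.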

In conclusion, this blog post explored various techniques to optimize QML performance. We discussed the impact of dynamic UI creation and compared asynchronous Loader and StackView for on-demand object creation. Additionally, we examined how Qt.callLater() can help reduce redundant function calls and improve overall performance. Lastly, we delved into the differences between implementing dynamic lists using ListView and Flickable with a nested Repeater, highlighting respective benefits of the ListView.

I hope that no matter if you are a seasoned QML developer or just starting, this blog post provided you with valuable insights, techniques and examples to help you optimize your app’s performance, select the right tools for the job and create a better user experience. We encourage you to share your feedback or leave a word of encouragement if you liked this post.
